What is a Person?

David Leech Anderson: Author

What is a person? The English term, "person," is ambiguous. We often use it as a synonym for "human being." But surely that is not what we intend here. It is possible that there are aliens living on other planets that have the same cognitive abilities that we do (e.g. E.T. the Extra-Terrestrial or the famous "bar scene" from Star Wars). Imagine aliens that speak a language, make moral judgments, create literature and works of art, etc. Surely aliens with these properties would be "persons"--which is to say that it would be morally wrong to buy or sell them as property the way we do with dogs and cats or to otherwise use them for our own interests without taking into account the fact that they are moral agents with interests that deserve the same respect and protection that ours do.

Thus, one of our primary interests is to distinguish persons from pets and from property. A person is the kind of entity that has the moral right to make its own life-choices, to live its life without (unprovoked) interference from others. Property is the kind of thing that can be bought and sold, something I can "use" for my own interests. Of course, when it comes to animals there are serious moral constraints on how we may treat them. But we do not, in fact, give animals the same kind of autonomy that we accord persons. We buy and sell dogs and cats. And if we live in the city, we keep our pets "locked up" in the house, something that we would have no right to do to a person.

How, then, should we define "person" as a moral category? [Note: In the long run, we may decide that there is a non-normative concept of "person" that is equally important, and even conceptually prior to any moral concept. At the outset, however, the moral concept will be our focus.] Initially, we shall define a person as follows:

- PERSON = "any entity that has the moral right of self-determination."

Many of us would be prepared to say, I think, that any entity judged to be a person would be the kind of thing that would deserve protection under the constitution of a just society. It might reasonably be argued that any such being would have the right to "life, liberty and the pursuit of happiness."

This raises the philosophical question: What properties must an entity possess to be a "person"? At the Mind Project, we are convinced that one of the best ways to learn about minds and persons is to attempt to build an artificial person, to build a machine that has a mind and that deserves the moral status of personhood. This is not to say that we believe that it will be possible anytime soon for undergraduates (or even experts in the field) to build a person. In fact, there is great disagreement among Mind Project researchers about whether it is possible, even in principle, to build a person -- or even a mind -- out of machine parts and computer programs. But that doesn't matter. Everyone at the Mind Project is convinced that it is a valuable educational enterprise to do our best to simulate minds and persons. In the very attempt, we learn more about the nature of the mind and about ourselves. At the very least, it forces us to probe our own concept of personhood. What are the properties necessary for being a person?

Many properties have been suggested as being necessary for being a person: intelligence, the capacity to speak a language, creativity, the ability to make moral judgments, consciousness, free will, a soul, self-awareness . . . and the list could go on almost indefinitely. Which properties do you think are individually necessary and jointly sufficient for being a person?

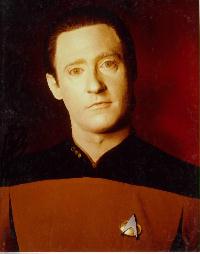

Star Trek: Is Commander Data a Person?

To begin our exploration of this question, we shall consider an interesting thesis, advanced in an episode of Star Trek: The Next Generation ("The Measure of a Man"). In that episode one of the main characters, an android called "Commander Data," is about to be removed from the Starship Enterprise to be dismantled and experimented upon. Data refuses to go, claiming to be a person with "rights" (presumably, this includes what we are calling the moral right of self-determination). He believes that it is immoral to experiment on him without his consent. His opponent, Commander Maddox, insists that Data is property, that he has no rights. A hearing is convened to settle the matter. During the trial, the attorneys consider the very same questions that concern us here:

- What is a person?

- Is it possible that a machine could be a person?

In the Star Trek episode, it is assumed that anything that is "sentient" should be granted the status of "personhood," and Commander Maddox suggests that being sentient requires that the following three conditions be met:

- Intelligence

- Self-awareness

- Consciousness

Captain Picard, who is representing Commander Data in the hearing, does not contest this definition of a person. Rather, he tries to convince the judge that Data possesses these properties (or at the least, that we are not justified in concluding that he lacks the properties).

What is intelligence?

Before turning to the specific arguments raised in the Star Trek episode, it will prove helpful to pause for a moment to consider the first property on the list, "intelligence." Could a computer be intelligent? Why or why not? A careful consideration of these questions requires a very close look both at computers and intelligence. And so we suggest that you first examine a few fascinating computer programs and think seriously about the questions, What is intelligence? and Is it possible for a machine to be intelligent? To help you reflect on these questions we recommend that you visit one of our modules on artificial intelligence.

[When you've finished with that section, return here]

Now that you have thought about "Intelligence" and pondered the possibility of "Machine Intelligence" let us turn to the Star Trek episode. You may find it interesting to note that while some people deny that machine intelligence is even a possibility, Commander Maddox (the one who denies that the android Data is a person) does not deny that Data is intelligent. He simply insists that Data lacks the other two properties necessary for being a person: self-awareness and consciousness. Here is what the character Maddox says regarding Data's intelligence.

| PICARD: | Is Commander Data intelligent? |

| MADDOX: | Yes, it has the ability to learn and understand and to cope with new situations. |

| PICARD: | Like this hearing. |

| MADDOX: | Yes. |

Commander Maddox, though admitting that Data is "intelligent" nonetheless denies that Data is a person because he lacks two other necessary conditions for being a person: self-awareness and consciousness. Before examining Maddox's reasons for thinking that Data is not self-aware, let us explore the concept of "self-awareness." It is important to get clear about what we mean by self-awareness and why it might be a requirement for being a person.

What is Self-Awareness?

Let us turn to the second property that the Star Trek episode assumes is necessary for sentience and personhood: "self-awareness." This has been the topic of considerable discussion among philosophers and scientists. What exactly do we mean by "self-awareness"? One might believe that there is something like a "self" deep inside of us and that to be self-aware is simply to be aware of the presence of that self. Who exactly is it, then, that is aware of the self? Another self? Do we now have two selves? Well, no, that's not what most people would have in mind.

The standard idea is probably that the self, though capable of being aware of things external to it, is also capable of being aware of its own states. Some have described this as a kind of experience. I might be said to have an "inner experience" of my own mental activity, being directly aware, say, of the thoughts that I am presently thinking and the attitudes ("I hope the White Sox win") that I presently hold. But even if we grant that we have such "inner experiences," they do not, by themselves, supply everything that we intend to capture by the term, "self-awareness." When I say that I am aware of my own mental activity (my thoughts, dreams, hopes, etc.) I do not mean merely that I have some inner clue to the content of that mental activity, I also mean that the character of that awareness is such that it gives me certain abilities to critically reflect upon my mental states and to make judgments about those states. If I am aware of my own behavior and mental activity in the right way, then it may be possible for me to decide that my behavior should be changed, that an attitude is morally objectionable or that I made a mistake in my reasoning and that a belief that I hold is unjustified and should be abandoned.

Consider the mental life of a dog, for example. Presumably, dogs have a rich array of experiences (they feel pain and pleasure, the tree has a particular "look" to it) and they may even have beliefs about the world (Fido believes that his supper dish is empty). Who knows, they may even have special "inner experiences" that accompany those beliefs. However, if we assume that dogs are not self-aware in the stronger sense, then they will lack the ability to critically reflect upon their beliefs and experiences and thus will be unable to have other beliefs about their pleasure or their supper-dish-belief (what philosophers call "second-order beliefs" or "meta-beliefs"). That is to say, they may lack the ability to judge that pleasure may be an unworthy objective in a certain situation or to judge that their belief that the supper dish is empty is unjustified.

But if that is getting any closer to the truth about the nature of self-awareness (and I'm not necessarily convinced that it is), then it becomes an open question whether being "self-aware" need be a kind of experience at all. It might be that a machine (a robot for example) could be "self-aware" in this sense even if we admit that it has no subjective experiences whatsoever. It might be self-aware even if we deny that "there is something that it is like to be that machine" (to modify slightly Thomas Nagel's famous dictum). Douglas Hofstadter offers a suggestion that will help us to consider this possibility, and we shall come to it shortly. First, let us turn to the Star Trek dialogue and see what it has to say about self-awareness.

| PICARD: | What about self-awareness? What does that mean? Why am I self-aware? |

| MADDOX: | Because you are conscious of your existence and actions. You are aware of your self and your own ego. |

| PICARD: | Commander Data. What are you doing now? |

| DATA: | I am taking part in a legal hearing to determine my rights and status. Am I a person or am I property? |

| PICARD: | And what is at stake? |

| DATA: | My right to choose. Perhaps my very life. |

| PICARD: | "My rights" . . "my status" . . "my right to choose" . . "my life". Seems reasonably self-aware to me. . . .Commander . . I'm waiting. |

| MADDOX: | This is exceedingly difficult. |

We might well imagine that Commander Maddox is thinking about subjective experiences when he speaks of being "conscious" of one's existence and actions. However, Picard's response is ambiguous. The only evidence that Picard gives of Data being self-aware is that he is capable of using particular words in a language (words like 'my rights' and 'my life'). Is it only necessary that Data have information about his own beliefs to be self-aware or must that information be accompanied by an inner feeling or experience of some kind? Douglas Hofstadter has some interesting thoughts on the matter.

Douglas Hofstadter on "Anti-sphexishness"

In one of his columns for Scientific American ("On the Seeming Paradox of Mechanizing Creativity"), Douglas Hofstadter wrote a thought-provoking piece about the nature of creativity and the possibility that it might be "mechanized" -- that is, that the right kind of machine might actually be creative. While creativity is his primary focus, here, much of what he says could be applied to the property of self-awareness.

The kernel of his idea is that to be uncreative is to be caught in an unproductive cycle ("a rut") which one mechanically repeats over and over in spite of its futility. On this account, then, creativity comes in degrees and consists in the ability to monitor one's lower level activities so that when a behavior becomes unproductive, one does not continually repeat it, but "recognizes" its futility and tries something new, something "creative". Hofstadter makes up a name for this repetitive, uncreative kind of behavior--he calls it sphexishness, drawing inspiration for the name from the behavior of a certain kind of wasp named Sphex. In his discussion, Hofstadter quotes from Dean Wooldridge, who describes the Sphex as follows:

When the time comes for egg laying, the wasp Sphex builds a burrow for the purpose and seeks out a cricket which she stings in such a way as to paralyze but not kill it. She drags the cricket into the burrow, lays her eggs alongside, closes the burrow, then flies away, never to return. In due course, the eggs hatch and the wasp grubs feed off the paralyzed cricket, which has not decayed, having been kept in the wasp equivalent of a deepfreeze. To the human mind, such an elaborately organized and seemingly purposeful routine conveys a convincing flavor of logic and thoughtfulness -- until more details are examined. For example, the wasp's routine is to bring the paralyzed cricket to the burrow, leave it on the threshold, go inside to see that all is well, emerge, and then drag the cricket in. If the cricket is moved a few inches away while the wasp is inside making her preliminary inspection, the wasp, on emerging from the burrow, will bring the cricket back to the threshold, but not inside, and will then repeat the preparatory procedure of entering the burrow to see that everything is all right. If again the cricket is removed a few inches while the wasp is inside, once again she will move the cricket up to the threshold and reenter the burrow for a final check. The wasp never thinks of pulling the cricket straight in. On one occasion this procedure was repeated forty times, with the same result. [from Dean Wooldridge's Mechanical Man: The Physical Basis of Intelligent Life]

Initially, the sphex's behavior seemed intelligent, purposeful. It wisely entered the burrow to search for predators. But if it really "understood" what it was doing, then it wouldn't repeat the activity 40 times in a row!! That is stupid!! It is reasonable to assume, therefore, that it doesn't really understand what it is doing at all. It is simply performing a rote, mechanical behavior -- and it seems blissfully ignorant of its situation. We might say that it is "unaware" of the redundancy of its activity. To be "creative", Hofstadter then says, is to be antisphexish -- to behave, that is, unlike the sphex.

If you want to create a machine that is antisphexish, then you must give it the ability to monitor its own behavior so that it will not get stuck in ruts similar to the Sphex's. Consider a robot that has a primary set of computer programs that govern its behavior (call these first-order programs). One way to make the robot more antisphexish would be to write special second-order (or meta-level) programs whose primary job was not to produce robot-behavior but rather to keep track of those first-order programs that do produce the robot-behavior to make sure that those programs did not get stuck in any "stupid" ruts. (A familiar example of a machine caught in a rut is the scene from several old science fiction films in which a robot misses the door and bangs into the wall over and over again, incapable of resolving its dilemma -- "unaware" of its predicament.)
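The division of labor just described can be made concrete with a toy sketch. Nothing here comes from Hofstadter's article: the one-dimensional "robot," the names `first_order_step`, `RutMonitor`, and `run`, and the rule "sidestep by one" are all hypothetical choices made for illustration. The first-order program produces behavior; the second-order program never looks at the world, only at the record of what the first-order program has been doing.

```python
from collections import deque


def first_order_step(position, door):
    """First-order program: walk straight ahead from the current position."""
    # The robot only gets through if it happens to be lined up with the door.
    return "through door" if position == door else "bump wall"


class RutMonitor:
    """Second-order program: watches outcomes of the first-order program."""

    def __init__(self, window):
        self.recent = deque(maxlen=window)

    def stuck(self, outcome):
        # A "rut" here means: the last `window` outcomes were all identical.
        self.recent.append(outcome)
        return len(self.recent) == self.recent.maxlen and len(set(self.recent)) == 1


def run(position, door, window=3, max_steps=20):
    monitor = RutMonitor(window)
    log = []
    for _ in range(max_steps):
        outcome = first_order_step(position, door)
        log.append((position, outcome))
        if outcome == "through door":
            break
        if monitor.stuck(outcome):
            position += 1           # meta-level intervention: try something new
            monitor.recent.clear()  # start watching afresh
    return log
```

With the default window the robot bumps the wall three times at each position before the monitor steps in, so `run(0, 2)` reaches the door on the seventh step. With a window too large ever to fill, for example `run(0, 2, window=100)`, the robot behaves like the Sphex and bumps the wall until `max_steps` runs out.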

A problem arises, however, even if it were possible to create these programs that "watch" other programs. Can you think what it is? What if the second-order program, the "watching" program, gets stuck in a rut? Then you need another program (a third-order program) whose job is to watch the "watching"-program. But now we have a dilemma (what philosophers call an "infinite regress"). We can have programs watching programs watching programs -- generating far more programs than we would want to mess with -- and yet still leave the fundamental problem unresolved: There would always remain one program that was un-monitored. What you would want for efficiency's sake, if it were possible, is what Hofstadter calls a "self-watching" program, a program that watches other programs but also keeps a critical eye peeled to its own potentially sphexish behavior. Yet Hofstadter insists that no matter what you do, you could never create a machine that was perfectly antisphexish. But then he also gives reasons why human beings are not perfectly antisphexish -- and why we shouldn't even want to be.
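One way to picture a "self-watching" program, offered only as a rough sketch and not as Hofstadter's own design, is a watcher that keeps a record of its own interventions and applies the same rut test to that record. The names `in_rut` and `SelfWatcher`, and the list of repair strategies, are hypothetical.

```python
def in_rut(history, window=3):
    """True when the last `window` entries of a history are identical."""
    tail = history[-window:]
    return len(tail) == window and len(set(tail)) == 1


class SelfWatcher:
    """Watches a behavior history and also its own history of fixes."""

    def __init__(self, fixes):
        self.fixes = list(fixes)   # hypothetical repair strategies
        self.current = 0
        self.own_history = []      # what the watcher itself has been doing

    def observe(self, behavior_history):
        if not in_rut(behavior_history):
            return None            # nothing to correct
        # Before repeating its usual fix, the watcher inspects itself.
        if in_rut(self.own_history):
            self.current = (self.current + 1) % len(self.fixes)
            self.own_history.clear()
        fix = self.fixes[self.current]
        self.own_history.append(fix)
        return fix
```

A watcher built with the fixes `["back up", "turn left"]` and shown the same stuck behavior five times in a row proposes "back up" three times, notices that it is repeating itself, and then switches to "turn left". Note that the sketch does not escape the regress: the `in_rut` test itself is never examined by anything, which fits the claim that no machine could be perfectly antisphexish.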

Can you see now why this kind of self-watching computer program might give us something like "self-awareness"? I am not saying that it is self-awareness -- that is ultimately for you to decide. But there are people who believe that human beings are basically "machines" and that our ability to be "self-aware" is ultimately the result of a complex set of computer programs running on the human brain. If one had reason to think that this was a plausible theory, then one might well think that Hofstadter's "self-watching" programs would play a key role in giving us the ability for self-awareness.

Let us return now to the discussion of Star Trek and Commander Data and the question: Is Data, the android, self-aware? Whether you think that Captain Picard has scored any points against Commander Maddox or not, Maddox seems less confident about his claim that Data lacks self-awareness than he was initially. But that doesn't mean that Maddox is giving up. There is one more property that Maddox insists is necessary for being a person: "Consciousness".

What is Consciousness?

Must an entity be "conscious" to be a person? If so, why? What exactly is consciousness? Is it ever possible to know, for certain, whether or not a given entity is conscious?

While there are many different ways of understanding the term "consciousness," one way is to identify it with what we might call the subjective character of experience. On this account, if one assumes that nothing could be a person unless it were conscious, and if one assumes that consciousness requires subjective experiences, then one would hold that no matter how sophisticated the external behavior of an entity, that entity will not be conscious and thus will not be a person unless it has subjective experiences, unless it possesses an inner, mental life.

Thomas Nagel discusses the significance of the "subjective character of experience" in his article, "What Is It Like to Be a Bat?" Note that Nagel is not concerned here with the issue of personhood. (He most definitely is not suggesting that bats are persons.) Rather he is interested to deny the claim that a purely physical account of an organism (of its brain states, etc.) could, even in principle, be capable of capturing the subjective character of that organism's experiences. Nagel's main concern is to challenge the claim, made by many contemporary scientists, that the objective, physical or functional properties of an organism tell us everything there is to know about that organism. Nagel says, "No." Any objective description of a person's brain states will inevitably leave out facts about that person's subjective experience--and thus will be unable to provide us with certain facts about that person that are genuine facts about the world.

One of Nagel's primary targets is the theory called functionalism, the most popular theory of the mind of the past twenty-five years. It continues to be the dominant account of the nature of mental states held by scientists and philosophers today. Before turning to Nagel's argument, you might want to learn a bit about functionalism. If so, click here and a new window will open up with an introduction to functionalism.

Now that you have a basic understanding of the theory of functionalism, here is Nagel's reason for thinking that a functionalist account of the mind will never be able to capture the fundamental nature of what it means to be conscious.

Conscious experience is a widespread phenomenon. It occurs at many levels of animal life, though we cannot be sure of its presence in the simpler organisms, and it is very difficult to say in general what provides evidence of it. (Some extremists have been prepared to deny it even of mammals other than man.) No doubt it occurs in countless forms totally unimaginable to us, on other planets in other solar systems throughout the universe. But no matter how the form may vary, the fact that an organism has conscious experience at all means, basically, that there is something it is like to be that organism. There may be further implications about the form of the experience, there may even (though I doubt it) be implications about the behavior of the organism. But fundamentally an organism has conscious mental states if and only if there is something that it is like to be that organism--something it is like for the organism. We may call this the subjective character of experience. It is not captured by any of the familiar, recently devised reductive analyses of the mental, for all of them are logically compatible with its absence. It is not analyzable in terms of any explanatory system of functional states, or intentional states, since these could be ascribed to robots or automata that behaved like people though they experience nothing. [Footnote: Perhaps there could not actually be such robots. Perhaps anything complex enough to behave like a person would have experiences. But that, if true, is a fact which cannot be discovered merely by analyzing the concept of experience.] It is not analyzable in terms of the causal role of experiences in relation to typical human behavior--for similar reasons. I do not deny that conscious mental states and events cause behavior, nor that they may be given functional characterizations. I deny only that this kind of thing exhausts their analysis. . . .
If physicalism is to be defended, the phenomenological features must themselves be given a physical account. But when we examine their subjective character it seems that such a result is impossible. The reason is that every subjective phenomenon is essentially connected with a single point of view, and it seems inevitable that an objective, physical theory will abandon that point of view. (Philosophical Review 83:392-393)

If an android is to be a person, must it have subjective experiences? If so, why? Further, even if we decide that persons must have such experiences, how are we to tell whether or not any given android has such experiences? Consider the following example. Assume that the computing center of an android uses two different "assessment programs" to determine whether or not to perform a particular act, A. In most cases the two programs agree. However, in this particular case, let's assume, the two programs give conflicting results. Further, let us assume that there is a very complex procedure that the android must go through to resolve this conflict, a procedure taking several minutes to perform. During the time it takes to resolve the conflict, is it appropriate to say that the android feels "confused" or "uncertain" about whether to perform A? If we deny that the android's present state is one of "feeling uncertain," on what grounds would we do so?
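To see how thin the functional description of this example can be, here is a minimal sketch of the android's decision step. The two assessment functions, their thresholds, and the `State` labels are invented for illustration; the text specifies only that two programs usually agree and sometimes conflict.

```python
from enum import Enum


class State(Enum):
    DECIDED = "decided"
    RESOLVING = "resolving conflict"


def assess_safety(act):
    """Hypothetical first assessment program: approve low-risk acts."""
    return act["risk"] < 0.5


def assess_benefit(act):
    """Hypothetical second assessment program: approve high-benefit acts."""
    return act["benefit"] > 0.5


def evaluate(act):
    """Return the android's functional state and verdict (None if unresolved)."""
    first, second = assess_safety(act), assess_benefit(act)
    if first == second:
        return State.DECIDED, first
    # The programs disagree: the android enters its lengthy resolution procedure.
    return State.RESOLVING, None
```

For an act that is both risky and beneficial, such as `{"risk": 0.8, "benefit": 0.9}`, `evaluate` returns `State.RESOLVING`. The code settles that the android occupies a distinct internal state while the conflict lasts. It does not settle whether being in that state is accompanied by any feeling of uncertainty, which is exactly the question posed above.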

Colin McGinn considers this question when he asks: "Could a Machine be Conscious?" Could there be something that it is like to be that machine? Very briefly, his answer is: Yes, a machine could be conscious. In principle it is possible that an artifact like an android might be conscious, and it could be so even if it were not alive, according to McGinn. But, he argues, we have no idea what property it is that makes US conscious beings, and thus we have no idea what property must be built into a machine to make it conscious. He argues that a computer cannot be said to be conscious merely by virtue of the fact that it has computational properties, merely because it is able to manipulate linguistic symbols at the syntactic level. Computations of that kind are certainly possible without consciousness. He suggests, further, that the sentences uttered by an android might actually MEAN something (i.e., they might REFER to objects in the world, and thus they might actually possess SEMANTIC properties) and yet still the android might not be CONSCIOUS. That is, the android might still lack subjective experiences, there might still be nothing that it is like to be that android. McGinn's conclusion then? It is possible that a machine might be conscious, but at this point, given that we have no clue what it is about HUMANS that makes us conscious, we have no idea what we would have to build into an android to make IT conscious.

Hilary Putnam offers an interesting argument on this topic. If there existed a sophisticated enough android, Putnam argues that there would simply be no evidence one way or another to settle the question whether it had subjective experiences or not. In that event, however, he argues that we OUGHT to treat such an android as a "conscious" being, for moral reasons. His argument goes like this. One of the main reasons that you and I assume that other human beings have "subjective experiences" similar to our own is that they talk about their experiences in the same way that we talk about ours. Imagine that we are both looking at a white table and then I put on a pair of rose-colored glasses. I say "Now the table LOOKS red." This introduces the distinction between appearance and reality, central to the discipline of epistemology. In such a context, I am aware that the subjective character of my experience ("the table APPEARS red") does not accurately reflect the reality of the situation ("the table is REALLY white"). Thus, we might say that when I speak of the "red table" I am saying something about the subjective character of my experience and not about objective reality. One analysis of the situation is to say that when I say that the table appears red, I am saying something like: "I am having the same kind of subjective experience that I typically have when I see something that is REALLY red."

The interesting claim that Putnam makes is that it is inevitable that androids will also draw a distinction between "how things APPEAR" and "how things REALLY are". Putnam asks us to imagine a community of androids who speak English just like we do. Of course, these androids must have sensory equipment that gives them information about the external world. They will be capable of recognizing familiar shapes, like the shape of a table, and they will have special light-sensors that measure the frequency of light reflected off of objects so that they will be able to recognize familiar colors. If an android is placed in front of a white table, it will be able to say: "I see a white table." Further, if the android places rose-colored glasses over its "eyes" (i.e., its sensory apparatus), it will register that the frequency of light is in the red spectrum and it will say "Now the table LOOKS red" or it might say "I am having a red-table sensation even though I know that the table is really white."
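The logic of this example can be sketched in a few lines. This is an illustrative toy under stated assumptions, not Putnam's own formulation: the `Android` class, its `beliefs` store, and the rule that rose glasses turn a white reading into a red one are all hypothetical simplifications.

```python
class Android:
    def __init__(self):
        self.glasses_on = False
        self.beliefs = {}  # object name -> color the android takes to be real

    def _sensor(self, true_color):
        """Hypothetical light sensor; rose glasses shift white toward red."""
        if self.glasses_on and true_color == "white":
            return "red"
        return true_color

    def look_at(self, name, true_color):
        reading = self._sensor(true_color)
        if not self.glasses_on:
            # Normal viewing conditions: take the reading at face value.
            self.beliefs[name] = reading
            return f"I see a {reading} {name}."
        believed = self.beliefs.get(name)
        if believed is not None and believed != reading:
            # The reading conflicts with what the android already holds to be real.
            return f"Now the {name} LOOKS {reading}, but I know it is really {believed}."
        return f"The {name} looks {reading}."
```

An android that first looks at a white table without the glasses reports "I see a white table." After `glasses_on` is set to `True`, the same call reports that the table looks red although it is really white. The appearance/reality distinction falls out of nothing more than a sensor reading, a stored belief, and knowledge that viewing conditions have changed.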

So what is the point of this example? Well, Putnam has shown that there is a built-in LOGIC when it comes to talk about the external world, given that the speaker's knowledge of the world comes through sensory apparatus (like the eyes and ears of human beings or the visual and audio receptors of a robot). A sophisticated enough android will inevitably draw a distinction between appearance and reality and, thus, it will distinguish between its so-called "sensations" (i.e., whatever its sensory apparatus reveals to it) and objective reality. Now of course, this may only show that androids of this kind would be capable of speaking AS IF they had subjective experiences, AS IF they were really conscious--even though they might not actually be so. Putnam admits this. He says we have no reason to think they are conscious, but we also have no reason to think they are not. Their discourse would be perfectly consistent with their having subjective experiences, and Putnam thinks that it would be something close to discrimination to deny that an android was conscious simply because it was made of metal instead of living cells. In effect he is saying that androids should be given the benefit of the doubt. He says:

I have concluded . . that there is no correct answer to the question: Is Oscar [the android] conscious? Robots may indeed have (or lack) properties unknown to physics and undetectable by us; but not the slightest reason has been offered to show that they do, as the ROBOT analogy demonstrates. It is reasonable, then, to conclude that the question that titles this paper ["Robots: Machines or Artificially Created Life?"] calls for a decision and not for a discovery. If we are to make a decision, it seems preferable to me to extend our concept so that robots are conscious--for "discrimination" based on the "softness" or "hardness" of the body parts of a synthetic "organism" seems as silly as discriminatory treatment of humans on the basis of skin color. [p.91]

Since Putnam thinks that there is no evidence one way or the other to settle the question, he says that we must simply decide whether we are going to grant androids the status of conscious beings. He says that we ought to be generous and do so. Not everyone would agree with Putnam on this score. Kurt Baier, for example, disagrees with Putnam, arguing that there would be good reason for thinking that the android in question was not conscious. Putnam considers two of Baier's objections and tries to speak to them. We do not have the space to consider their debate here. It is not a debate easily settled. It is interesting to note, however, that Putnam seems to have one interesting "philosopher" on his side: CAPTAIN PICARD from our Star Trek episode.

We are going to close this discussion with a dramatic scene near the end of the Star Trek episode that we've been following. In an earlier scene (with Guinan, played by Whoopi Goldberg), Picard was led to embrace a moral argument in defense of Data. This is an argument that you may find interesting because it is remarkably similar to Putnam's argument in spirit.

| PICARD: | Do you like Commander Data? |

| MADDOX: | I don't know it well enough to like or dislike it. |

| PICARD: | But you do admire him? |

| MADDOX: | Oh, yes it is an extraordinary piece of . . |

| PICARD: | . . of engineering and programming, yes you have said that. You have dedicated your life to the study of cybernetics in general and Data in particular. |

| MADDOX: | Yes. |

| PICARD: | And now you intend to dismantle him. |

| MADDOX: | So I can learn to construct more. |

| PICARD: | How many more? |

| MADDOX: | As many as are needed. Hundreds, thousands if necessary. There is no limit. |

| PICARD: | A single Data, and forgive me Commander, is a curiosity, a wonder even. But thousands of Datas, isn't that becoming a race? And won't we be judged by how we treat that race? Now tell me Commander, what is Data? |

| MADDOX: | I don't understand. |

| PICARD: | What is he? |

| MADDOX: | A machine. |

| PICARD: | Are you sure? |

| MADDOX: | Yes. |

| PICARD: | You see he has met two of your three criteria for sentience. What if he meets the third, consciousness, in even the slightest degree? What is he then? I don't know. Do you? Do YOU [turning to the judge]? Well that's the question you have to answer. Your Honor, the courtroom is a crucible. In it we burn away irrelevancies until we are left with a pure product, the truth, for all time. Now sooner or later this man (MADDOX) or others like him will succeed in replicating Commander Data. Your ruling today will determine how we will regard this creation of our genius. It will reveal the kind of people we are, what he is destined to be. It will reach far beyond this courtroom and this one android. It could significantly redefine the boundaries of personal liberty. Expanding them for some, savagely curtailing them for others. Are you prepared to condemn him, and all those who come after him, to servitude and slavery? Your honor, Starfleet was founded to seek out new life -- well there it sits. Waiting. You wanted a chance to make law. Well here's your chance, make it a good one. |

| LOUVOIS: | It sits there looking at me but I don't know what it is. This case has dealt with questions best left to saints and philosophers. I am neither competent nor qualified to answer that. I've got to make a ruling, to try to speak to the future. Is Data a machine? Is he the property of Starfleet? No. We've all been dancing around the main question: Does Data have a soul? I don't know that he has. I don't know that I have. But I have to give him the freedom to explore that question himself. It is the ruling of this court that Lieutenant Commander Data has the freedom to choose. |

So, is Commander Data a person? We do not presume to answer that question in these pages. But we do hope that you've thought through the question a little more deeply than you had before.

From the question, "Could a machine be a person" we can move to the question "Could you be a machine?" Or, more accurately, could the very person that is you be downloaded into a computer? A brief discussion of that topic is found here:

FUNDING:

This module was supported by National Science Foundation Grants #9981217 and #0127561.