
Robots Making Connections

David Leech Anderson: Author

In a previous section, we saw how a robot controlled by a digital, symbol-processing computer might "reason" about how it was going to behave. It is now time to meet a similar robot that is controlled by a nonclassical computer, one that "reasons" in quite a different way. As you may remember from our earlier discussion ("Two types of . . . "), nonclassical computers differ from the computers with which you are most familiar (e.g., PCs and Macs). For this discussion, we have created an animated robot controlled by a computer with several distinctive "nonclassical" features. This "nonclassical" robot is intended to serve several purposes. First, it is controlled by a connectionist network of a very simple sort, so we hope it will help you understand how connectionist networks operate (see "Introduction to Connectionism"). Second, and significantly, our connectionist network performs the job of "reasoning" about how the robot is going to behave. While it is fairly easy to see why a connectionist network would be the right kind of computer to use in a vision system for "recognizing" objects (see "Computer Vision & Connectionist Networks", coming soon), it is less obvious that our more deliberate "reasoning processes" could be accomplished in a purely connectionist way. The WC ("water-computer") robot will give us something concrete to look at as we consider some of the more puzzling aspects of connectionist networks performing tasks of higher reasoning.

The robot you are about to see is controlled by a water computer, which functions in a way that is similar, in some key respects, to the way connectionist networks function. Connectionist computer programs (artificial neural nets) were inspired by what we know of the functioning of the complex array of neurons in the brain. A good deal of progress has been made in understanding how neural networks in the brain process the information that our vision system uses to enable us to "see" the world. This progress has come in part from the success of artificial neural networks (connectionist computer programs) in "recognizing" patterns in visual data, in a way that is, in many respects, quite similar to the way our own brains do it.

We don't understand as well how networks of neurons perform higher cognitive functions, like the reasoning we do when we make deliberate choices based on what we take to be "good reasons." Some people believe that when you do a math problem, or when you make a deliberate choice after considering all of the reasons for and against it, you are functioning like a symbol-processing computer, manipulating internal symbols according to rules. You have already seen our animated PC robot, which functions in precisely this way. It manipulates symbols that represent the words of English, and it produces strings of symbols that represent whole sentences. Those sentences are then strung together and express the "reasons" that the robot has for acting as it does. The symbol-processing model also shows exactly how those reasons lead the robot (according to precise "rules" or algorithms) to a "decision" about how to act (see "Robots Using Symbols").
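To make the contrast with the WC robot concrete, here is a minimal Python sketch of what "manipulating symbols according to rules" can look like. It is an illustration under our own assumptions, not the PC robot's actual program: beliefs are stored as explicit English sentences, and a single rule of inference (modus ponens: from "If p, then q" and "p", conclude "q") is applied until nothing new follows. The function name `apply_modus_ponens` and the sample sentences are hypothetical.

```python
def apply_modus_ponens(beliefs: set[str], conditionals: dict[str, str]) -> set[str]:
    """Return every sentence derivable from `beliefs` using 'If p, then q' rules.

    `conditionals` maps an antecedent sentence p to a consequent sentence q.
    The rule is applied repeatedly until no new sentence can be added.
    """
    derived = set(beliefs)
    changed = True
    while changed:
        changed = False
        for p, q in conditionals.items():
            # Modus ponens: if we hold p, and 'If p, then q', add q.
            if p in derived and q not in derived:
                derived.add(q)
                changed = True
    return derived


# Hypothetical beliefs and rule, stored as explicit strings of symbols.
beliefs = {"The door is open"}
conditionals = {"The door is open": "I shall shut the door"}

conclusions = apply_modus_ponens(beliefs, conditionals)
if "I shall shut the door" in conclusions:
    print("Decision: shut the door")
```

Each belief and each rule here is a discrete, inspectable string, which is what makes it natural to say that such a robot acts "because of" a particular rule.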

Meet the WC ("water-computer") robot

You are going to meet the second of our two virtual robots. These are not physical robots made of metal and silicon; they are animated robots that will operate right here on this webpage (assuming you have a recent enough Flash plug-in for your browser). Since we are interested in the kind of information processing that the two robots do, and not in their physical make-up, it doesn't really matter what their "bodies" are made of. We only need to know what they do with the information they receive from the environment, and that we will clearly see.


On the outside the robots look the same (see the image to the right). They both have a video camera that (we will assume) gives them information about their environment. They both have robotic wheels that move them about and a robotic arm to pick up objects. Not only do they look the same, but they behave in the same way as well. That, however, is where the similarity ends: on the inside they differ quite radically. The information that they receive about the world from the video camera (for example, information about whether a door is open or closed) is "represented" in different ways. Also, the procedures by which they "decide" how to behave are distinctly different. The best way to appreciate their differences is to understand how each robot operates.

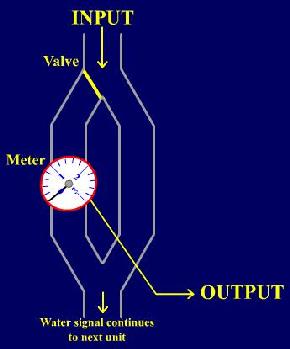

At the heart of our WC robot is what we are calling a "water-computer." It is not made of silicon chips and on-off switches the way the PC robot is. Rather, it is made of water pipes, valves, and meters. The most important component of the water computer is what we call the "water unit" or "water node" (pictured below left). The water unit is supposed to be a very rough simulation of a unit or node in a connectionist network, and the units (or nodes) in a connectionist network are modeled on the neurons in your brain. (But be careful here: these are only rough analogies. There are many differences between our water unit and a biological neuron.)

The water computer processes information. It takes in information about the world from its video camera and carries that information to the rest of the system using water as the medium of communication. Water enters the unit from the top ("input"), and it always enters in the form of whole cups (8 oz.) of water. In a given time period there could be any number of cups of water (0, 1, 2, 3, etc.), but there will never be half a cup or a quarter of a cup. The reason the water comes in discrete quantities (or quanta) is that the water is supposed to be more or less like the firing of a neuron. Whether a neuron fires or not is an all-or-nothing affair: it either fires or it doesn't. And when it does fire, it always sends an electrical signal of roughly the same strength. So if one neuron is going to exert a greater influence on another neuron, it doesn't accomplish that by sending an electrical signal with a stronger charge. Rather, it increases the quantity of signals, which is to say that it simply fires more frequently. The same thing holds for our water computer. A "water-signal," like the electrical signal from a neuron, comes in only one strength or size: the "one-cup" size. If a stronger signal is going to be sent, it will be accomplished by sending more cups of water through the unit in a given time period.
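The one-cup rule can be sketched in a few lines of Python. This is a toy model under our own assumptions (the name `pour` and the list-of-cups representation are hypothetical): a signal's strength is carried only by how many cups arrive in a time period, never by the size of a cup.

```python
CUP_OZ = 8  # every water-signal is exactly one 8 oz. cup


def pour(cups_per_period: int) -> list[int]:
    """Return the individual water-signals (in oz.) sent during one time period.

    Only whole cups exist, so the count must be a non-negative integer.
    """
    if not isinstance(cups_per_period, int) or cups_per_period < 0:
        raise ValueError("cups come only in whole numbers: 0, 1, 2, 3, ...")
    return [CUP_OZ] * cups_per_period


weak = pour(1)
strong = pour(3)
print(weak, strong)  # [8] [8, 8, 8]: same-size signals, different counts
```

A stronger signal is simply a longer list; there is no way to pour a "bigger" cup, just as a neuron cannot send a "bigger" spike.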


The influence that one unit has on another is not determined solely by the frequency of the signals (i.e., the number of cups sent during a particular period of time). It is also determined by how strong the connection between the two units is. The larger the connection weight between the two units, the greater the influence that each "water-signal" (each cup) will have on the unit that receives it.
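One simple way to combine the two factors, and the usual one in connectionist models, is to multiply them. We assume that rule in the Python sketch below; the paragraph above says only that both factors matter, and the function name `influence` is hypothetical.

```python
def influence(cups_sent: int, connection_weight: float) -> float:
    """Influence of a sending unit on a receiving unit in one time period.

    Assumed model: frequency (cups per period) times connection weight (0 to 1).
    """
    if not 0.0 <= connection_weight <= 1.0:
        raise ValueError("connection weight must be between 0 and 1")
    return cups_sent * connection_weight


# Many cups through a weak connection vs. one cup through a strong one.
frequent_but_weak = influence(cups_sent=3, connection_weight=0.2)
rare_but_strong = influence(cups_sent=1, connection_weight=0.8)
print(round(frequent_but_weak, 2), round(rare_but_strong, 2))  # 0.6 0.8
```

Under this model, a single cup through a strong connection can outweigh several cups through a weak one.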

The connection weight in our water computer is determined by the size of the opening of the valve on the left-side pipe. This is because there is a meter on the left branch of the pipe, and only the water registered by the meter carries any information to the other parts of the system. Any water that doesn't make it through the valve on the left side is passed through the right branch of the pipe to the next unit. Below is an interactive model of a water unit. Click on the numbered ovals on the left side (0, .2, .4, .6, .8, 1). You will see the size of the valve change, and you will see one cup of water poured into the unit. The larger the valve opening on the left side, the more water will flow through the left branch of the pipe and be registered by the meter. Click on all the components of the water unit, and information about each will appear in the dialogue box on the right.

A WATER PROCESSING UNIT
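The valve-and-meter arrangement can be modeled as a split of each cup between two branches. In the Python sketch below we assume the split is exactly proportional to the valve opening (a fraction from 0 to 1, matching the ovals 0, .2, .4, .6, .8, 1); a real valve would not behave so neatly. The class `WaterUnit` and its `pour` method are hypothetical names.

```python
from dataclasses import dataclass

VALVE_SETTINGS = (0.0, 0.2, 0.4, 0.6, 0.8, 1.0)  # the ovals in the animation


@dataclass
class WaterUnit:
    """A single water unit; the left valve opening plays the role of the connection weight."""

    valve_opening: float  # fraction of each cup let through the left valve (0 to 1)

    def __post_init__(self) -> None:
        if not 0.0 <= self.valve_opening <= 1.0:
            raise ValueError("valve opening must be between 0 and 1")

    def pour(self, cups: int) -> tuple[float, float]:
        """Pour whole cups into the top of the unit.

        Returns (cups registered by the meter on the left branch,
        cups passed on through the right branch to the next unit).
        """
        metered = cups * self.valve_opening
        passed_on = cups - metered
        return metered, passed_on


for setting in VALVE_SETTINGS:
    metered, passed_on = WaterUnit(setting).pour(1)
    print(f"valve {setting:.1f}: meter {metered:.1f} cup, right branch {passed_on:.1f} cup")
```

In this model only `metered` would feed the unit's output; `passed_on` is the water sent down the right branch, which carries no information.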

If you have read all 9 dialogue boxes in the animation, then you know most of what you need to know about the workings of a single water unit. There is one other thing, however, that requires further explanation. In this system, information is carried into the unit in the form of water, but it is carried out of the unit in the form of an electrical signal sent down the yellow wire that exits to the right (labeled "output"). The information carried by this electrical signal could be sent to different places. In the WC robot that you will see shortly, the electrical signal from each unit is sent directly to the robot's wheels and robotic arm, carrying information about how those devices are supposed to move. However, if this water unit were buried deep within a connectionist network, its electrical signal would instead be sent to a tank of water, carrying information about how frequently cups of water should be released to the other water units to which the original unit is connected. For simplicity's sake, our water computer shows only the units that feed directly to the robotic devices.
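The two destinations for the output signal can be sketched as follows. We assume (this is not specified above) that the electrical signal is proportional to what the meter registers, and that a tank converts a signal into a whole number of cups per time period using a hypothetical gain, `cups_per_signal`. The function names `output_signal` and `tank_release` are hypothetical as well.

```python
def output_signal(cups_in: int, valve_opening: float) -> float:
    """Electrical signal sent down the 'output' wire.

    Assumption: the signal is proportional to the water registered by the
    left-branch meter (cups poured in times the valve opening).
    """
    return cups_in * valve_opening


def tank_release(signal: float, cups_per_signal: float = 5.0) -> int:
    """Hypothetical tank deep inside a network: turns an electrical signal
    into a whole number of cups per time period for downstream units.

    Water only ever moves in whole cups, so the result is rounded.
    """
    return max(0, round(signal * cups_per_signal))


# Unit A sits deep in the network and feeds unit B through a tank;
# unit B feeds a robotic device directly.
signal_a = output_signal(cups_in=4, valve_opening=0.5)
cups_for_b = tank_release(signal_a)
signal_b = output_signal(cups_in=cups_for_b, valve_opening=0.2)
print(f"A -> {signal_a:.1f}, tank releases {cups_for_b} cups, B -> device {signal_b:.1f}")
```

The tank is what lets a signal that leaves one unit as electricity re-enter the next unit as whole cups of water.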

Below you will find an interactive animation of the WC robot, a robot controlled by a connectionist network made of water pipes. Explore the operations of the WC robot. You might also want to compare this robot to the symbol-processing robot that was discussed in an earlier section (see "Robots Using Symbols"). After you've explored the differences between the two robots, carefully consider the questions that follow.

WATER COMPUTER ROBOT
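For comparison with the symbol-processing robot, here is a hypothetical Python sketch of a controller in the spirit of the WC robot, with one water unit per robotic device. The valve openings, the cup rates produced by the camera, and all names (`VALVES`, `camera_cups`, `controller`) are invented for illustration; they are not taken from the animation.

```python
# Hypothetical valve openings (connection weights), one unit per robotic device.
VALVES = {"wheels": 0.8, "arm": 0.6}


def camera_cups(door_open: bool) -> int:
    """Hypothetical camera stage: an open door yields 3 cups per period, a closed door none."""
    return 3 if door_open else 0


def controller(door_open: bool) -> dict[str, float]:
    """Signal sent to each robotic device: cups from the camera times that unit's valve."""
    cups = camera_cups(door_open)
    return {device: cups * valve for device, valve in VALVES.items()}


for door_open in (True, False):
    signals = controller(door_open)
    print(door_open, {device: round(s, 1) for device, s in signals.items()})
```

The door-closing behavior depends entirely on these numbers, and no sentence like "If the door is open, then I shall shut the door" appears anywhere in the code. Whether, and where, such a controller could be said to have a "belief" is exactly what Q3 asks.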

There are a number of questions for you to consider. One question that is much debated in cognitive science is this:

Q1: When we consciously think about how we are going to behave (i.e., when we "reason"), is that process most accurately modeled by the symbol processing activity of a classical (digital) computer or is it more accurately modeled by a connectionist network?

This is a very complicated question that raises a number of subtle issues. If we are going to answer that question, there are others that may also be important to consider. Let's consider a couple more.

Q2: In the case of the symbol-processing robot, it seemed to make sense to say that the robot closed the door because of the following inference rule:

If p, then q.
p.
Therefore, q.

Q3: In the symbol-processing robot, it made sense to speak of the robot's "beliefs" (even if we think they are only simulated beliefs). But is there ANY sense in which it makes sense to say that the WC robot has "beliefs" of any kind? If so, where is the belief stored? What exactly represents the information that "The door is open"? And even if there is an answer to that question, where is the conditional belief stored (viz. "If the door is open, then I shall shut the door")?

Finally, there is one more issue to raise. The last few questions have focused on the characteristics of connectionist networks that have convinced some people that human reasoning is only properly modeled with a symbol-processing robot, not with a connectionist robot. You might think that would provide an easy way to escape some of the troubling questions just raised. But not necessarily. While making "rational inferences" may be one important characteristic of human cognition, it is not the only one. Another feature is that humans have a rich kind of flexibility. We have the ability to learn and to adapt to new situations. This is a property that connectionist networks seem to be able to handle more readily than symbol-processing programs running on classical computers (see "Network Behavior and Learning" in the Connectionism pages).
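Learning in a connectionist network typically means adjusting connection weights, which in the water computer would mean adjusting valve openings. The Python sketch below uses the delta rule, a standard textbook learning rule that we have swapped in purely for illustration (the text above does not say which rule, if any, the WC robot could use). The function name `train_valve`, the learning rate, and the training examples are all hypothetical.

```python
def train_valve(
    valve: float,
    examples: list[tuple[int, float]],
    rate: float = 0.05,
    epochs: int = 50,
) -> float:
    """Adjust one valve opening with the delta rule.

    Each example pairs a number of cups poured in with the meter reading we
    want. After each example the valve moves in proportion to the error
    (target minus actual reading) times the input, then is clipped to the
    physically possible range 0..1.
    """
    for _ in range(epochs):
        for cups, target in examples:
            reading = cups * valve
            error = target - reading
            valve += rate * error * cups
            valve = min(1.0, max(0.0, valve))
    return valve


# Hypothetical teaching signal: the meter should read 0.4 of whatever is poured in.
examples = [(3, 1.2), (1, 0.4)]
learned = train_valve(valve=0.0, examples=examples)
print(round(learned, 3))
```

No rule about what to do is ever written down here; the valve is simply nudged until the meter readings match the examples.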

There remains a tension, then. Some aspects of higher cognitive functioning seem to be more reasonably modeled using a symbol-processing computer; other aspects seem better suited to connectionist networks. Might there be some sense in which both models are right? Some people believe there is (their views are often called hybrid theories). Others think this is just wishful thinking. Those who defend a connectionist interpretation (and reject the symbol-processing model altogether) typically appeal to the biological evidence about the brain and the biological "neural networks" that seem to do virtually all of our cognitive processing. Others, who accept the symbol-processing model, believe that the activity of the neurons actually implements a "symbol system." This is a topic for another time, however (more on that coming soon).

DISCUSSION ASSIGNMENT: Carefully consider each of the three questions above (Q1-Q3) and jot down notes about them to aid you in class discussion.